文章大纲

当前 Kubernetes 已经更新到了 v1.29,使用 kubeadm 来部署一个三节点的集群。

集群的规划

集群使用三台虚拟机,网络和角色信息如下:

| 主机名 | 网络 | 操作系统 | 角色 |

|---|---|---|---|

| master01.example.com | 192.168.121.61/24 | CentOS9S | Master |

| worker01.example.com | 192.168.121.87/24 | CentOS9S | Worker |

| worker02.example.com | 192.168.121.29/24 | CentOS9S | Worker |

准备阶段

在具体部署之前需要对虚拟机做相应的基础的配置。

系统基本配置

首先节点之间应互相能够解析主机名,在不具备 DNS 的前提下,可修改 /etc/hosts 文件:

192.168.121.61 master01.example.com master01

192.168.121.87 worker01.example.com worker01

192.168.121.29 worker02.example.com worker02接下来需要禁用 SELinux,在 9 版本中 disable SELinux 略有不同:

sudo setenforce 0

sudo grubby --update-kernel ALL --args selinux=0随后需要防火墙放行相应的端口 ports and protocols,或者关闭主机防火墙:

sudo systemctl disable --now firewalld启用 ipvs 支持

ipvs 已经加入了内核,为 kube-proxy 开启 ipvs 的前提需要加载以下的内核模块:

cat >> /etc/modules-load.d/ipvs.conf<<EOF

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack_ipv4

EOF手动加载模块:

modprobe ip_vs

modprobe ip_vs_rr

modprobe ip_vs_wrr

modprobe ip_vs_sh

modprobe nf_conntrack_ipv4各个节点还需安装 ipset ,为了便于查看 ipvs 的代理规则,最好也安装 ipvsadm :

dnf install -y ipset ipvsadm安装容器运行时

容器运行时有很多种,这里选择安装 CRI-O。

添加 overlay 和 br_netfilter 内核模块到 /etc/modules-load.d/crio.conf 中:

cat >>/etc/modules-load.d/crio.conf<<EOF

overlay

br_netfilter

EOF手动加载内核模块:

modprobe overlay

modprobe br_netfilteroverlay 模块:用于支持 overlay 文件系统,被很多容器运行时包括 CRI-O 依赖。overlay 文件系统允许通过在彼此之上创建层来允许有效利用存储空间。这意味着多个容器可以共享基础镜像,同时拥有自己的可写顶层。br_netfilter 模块:用于桥接网络的网络地址转换 (NAT) 的 iptables 规则需要该模块。桥接网络是将多个容器连接到单个网络中的一种方式。通过使用桥接网络,网络中的所有容器都可以相互通信以及与外界通信。

为容器提供网络功能:

cat >>/etc/sysctl.d/kubernetes.conf<<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF用于 Kubernetes 和 CRI-O 正常工作的重要内核参数:

net.bridge.bridge-nf-call-ip6tables = 1和net.bridge.bridge-nf-call-iptables = 1使内核能够使用桥接网络在容器之间转发网络流量。Kubernetes 和 CRI-O 使用桥接网络为给定主机上的所有容器创建单个网络接口。net.ipv4.ip_forward启用 IP 转发,允许数据包从一个网络接口转发到另一个网络接口。Kubernetes 需要在集群中的不同 Pod 之间路由流量。

使用 sysctl 使内核参数生效:

sysctl --system添加 Kubernetes 源:

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://pkgs.k8s.io/core:/stable:/v1.29/rpm/

enabled=1

gpgcheck=1

gpgkey=https://pkgs.k8s.io/core:/stable:/v1.29/rpm/repodata/repomd.xml.key

exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni EOF添加 CRI-O 源:

cat <<EOF | tee /etc/yum.repos.d/cri-o.repo

[cri-o]

name=CRI-O

baseurl=https://pkgs.k8s.io/addons:/cri-o:/$PROJECT_PATH/rpm/

enabled=1

gpgcheck=1

gpgkey=https://pkgs.k8s.io/addons:/crio:/$PROJECT_PATH/rpm/repodata/repomd.xml.key

EOF安装依赖包从官方源:

dnf install -y container-selinux安装 kubeadm kubelet 和 cri-o :

dnf install -y cri-o kubelet kubeadm kubectl修改 CRI-O 使用指定的 pause image,编辑 /etc/crio/crio.conf.d/10-crio.conf :

[crio.image]

signature_policy = "/etc/crio/policy.json"

pause_image="registry.aliyuncs.com/google_containers/pause:3.9"

[crio.runtime]

default_runtime = "crun"

[crio.runtime.runtimes.crun]

runtime_path = "/usr/bin/crio-crun"

monitor_path = "/usr/bin/crio-conmon"

allowed_annotations = [ "io.containers.trace-syscall", ]

[crio.runtime.runtimes.runc]

runtime_path = "/usr/bin/crio-runc" monitor_path = "/usr/bin/crio-conmon"启动 CRI-O:

systemctl enable --now cri-o.service安装 crictl 工具:

wget https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.29.0/crictl-v1.29.0-linux-amd64.tar.gz

tar -xzvf crictl-v1.29.0-linux-amd64.tar.gz

install -m 755 crictl /usr/local/bin/crictl使用 crictl 对容器运行时进行测试:

crictl --runtime-endpoint=unix:///var/run/crio/crio.sock version

Version: 0.1.0

RuntimeName: cri-o

RuntimeVersion: 1.29.0

RuntimeApiVersion: v1使用 kubeadm 部署 Kubernetes

当前各个节点已经安装好了 kubeadm 和 kubelet 。

使用 kubelet --help 获取 kubelet 命令行参数,可以看到大量的参数都处于了 DEPRECATED ,官方推荐使用 --config 指定运行配置文件,在配置文件中指定原先的参数。

在 1.22 版本之前,Swap 必须禁用,否则 kubelet 将无法启动,从 1.22 开始引入了 NodeSwap 功能,可以通过在启用了 NodeSwap feature gate 在节点上启用交换内存,此外必须禁用 failSwapon 配置。

可以配置 memorySwap.swapBehavior 以定义节点使用 swap 的方式:

memorySwap:

swapBehavior: UnlimitedSwapswapBehavior 可用的参数有:

UnlimitedSwap(默认):Kubernetes 工作负载可以使用它们请求的所有交换内容,最多达到系统限制。LimitedSwap:Kubernetes 工作负载对交换内容的利用受到限制。只允许使用 Burstable QoS(可突发 QoS)的 Pod 使用 Swap,如果没有为memorySwap定义配置,且启用了NodeSwap特性,kubelet 将默认应用与UnlimitedSwap设置相同的行为。

使用 kubeadm init 初始化集群

使用 kubeadm config print init-defaults --component-configs KubeletConfiguration 可以打印集群初始化默认使用的配置。

从默认的配置中,使用 imageRepository 在集群初始化时拉取所需镜像,可以修改为国内镜像仓库地址。

同时设置 failSwapOn 为 false 和 KubeProxyConfiguration 的 mode 为 ipvs 。

创建 kubeadm.yaml 配置文件:

apiVersion: kubeadm.k8s.io/v1beta3

kind: InitConfiguration

localAPIEndpoint:

advertiseAddress: 192.168.121.61

bindPort: 6443

nodeRegistration:

criSocket: unix:///var/run/crio/crio.sock

taints:

- effect: PreferNoSchedule

key: node-role.kubernetes.io/master

---

apiVersion: kubeadm.k8s.io/v1beta3

kind: ClusterConfiguration

kubernetesVersion: 1.29.0

imageRepository: registry.aliyuncs.com/google_containers

networking:

podSubnet: 10.244.0.0/16

---

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

cgroupDriver: systemd

failSwapOn: false

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs使用 kubeadm config images list --config kubeadm.yaml 列出所需的 image:

kubeadm config images list --config kubeadm.yaml

registry.aliyuncs.com/google_containers/kube-apiserver:v1.29.0

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.29.0

registry.aliyuncs.com/google_containers/kube-scheduler:v1.29.0

registry.aliyuncs.com/google_containers/kube-proxy:v1.29.0

registry.aliyuncs.com/google_containers/coredns:v1.11.1

registry.aliyuncs.com/google_containers/pause:3.9

registry.aliyuncs.com/google_containers/etcd:3.5.10-0在各个节点上使用 kubeadm config images pull --config kubeadm.yaml 预先拉取 image :

kubeadm config images pull --config kubeadm.yaml

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.29.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.29.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.29.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.29.0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.11.1

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.9

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.10-0选择 master01 作为 Master Node,执行 kubeadm init 来初始化集群:

kubeadm init --config kubeadm.yamlbootstrap 的过程:

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "super-admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 4.001865 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master01.example.com as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master01.example.com as control-plane by adding the taints [node-role.kubernetes.io/master:PreferNoSchedule]

[bootstrap-token] Using token: 8qz9xg.nd55zi0uq9i4iy61

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.121.61:6443 --token 8qz9xg.nd55zi0uq9i4iy61 \

--discovery-token-ca-cert-hash sha256:06398adc59682b72540fcddd6cda78b53df8fc2b5e1c5d08daad69495f377300根据输出的内容基本上可以看出手动初始化安装一个 Kubernetes 集群所需要的关键步骤。 其中有以下关键内容:[preflight] 拉取相关 image[cert] 生成相关证书[kubeconfig] 生成相关 kubeconfig 文件[etcd] 使用 /etc/kubernetes/manifests 中的文件创建静态 etcd Pod[control-plane] 使用 /etc/kubernetes/manifests 中的文件创建 kube-apiserver kube-controller-manager kube-scheduler 的静态 Pod[bootstrap-token] 生成 token,用于后续使用 kubeadm join 使用[addons] 安装基本的插件,CoreDNS kube-proxy

以下的命令是配置常规用户如何访问集群:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config如果是 root 用户的话,可以直接通过环境变量的方式进行访问:

export KUBECONFIG=/etc/kubernetes/admin.conf验证集群组件的健康状态:

kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy ok安装包管理器 helm

Helm 是 Kubernetes 常用的包管理器。

wget https://get.helm.sh/helm-v3.13.3-linux-amd64.tar.gz

tar xzvf helm-v3.13.3-linux-amd64.tar.gz

sudo install -m 755 linux-amd64/helm /usr/local/bin/helm部署 Pod Network 组件 Calico

选择 Calico 作为 Kubernetes 的 Pod 网络组件,使用 helm 在集群中安装 Calico。

下载 tigera-operator 的 helm chart:

wget https://github.com/projectcalico/calico/releases/download/v3.27.0/tigera-operator-v3.27.0.tgz使用 helm 安装 calico:

helm install calico tigera-operator-v3.27.0.tgz -n kube-system --create-namespace

NAME: calico

LAST DEPLOYED: Fri Feb 2 08:01:17 2024

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

TEST SUITE: None确认所有的 Pod 处于 runing 的状态:

kubectl get pods -n kube-system | grep tigera-operator [0/1966]

tigera-operator-55585899bf-gknl8 1/1 Running 0 3m48s

[vagrant@master01 ~]$ kubectl get pods -n calico-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-7974f5dc8b-g8v69 1/1 Running 0 3m43s

calico-node-x8xt9 1/1 Running 0 3m44s

calico-typha-58bf4f4fc5-m7hk9 1/1 Running 0 3m44s

csi-node-driver-r4f7r 2/2 Running 0 3m44s将 calicoctl 安装为 kubectl 的插件:

curl -o kubectl-calico -O -L "https://github.com/projectcalico/calico/releases/download/v3.27.0/calicoctl-linux-amd64"

sudo install -m 755 kubectl-calico /usr/local/bin/kubectl-calico验证插件是否正常工作:

kubectl calico -h验证 Kubernetes DNS 是否可用

进入到一个临时 Pod 中:

kubectl run curl --image=radial/busyboxplus:curl -it执行 nslookup 命令能返回正确的解析:

[ root@curl:/ ]$ nslookup kubernetes.default

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes.default

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local如果验证失败的话,尝试运行以下命令来清理防火墙:

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X向 Kubernetes 集群中添加节点

使用下面命令生成 kubeadm join 所需的 token:

kubeadm token create --print-join-command

kubeadm join 192.168.121.61:6443 --token jryd3e.qor9ctw2fnv6zzjo --discovery-token-ca-cert-hash sha256:06398adc59682b72540fcddd6cda78b53df8fc2b5e1c5d08daad69495f377300在 worker01 和 worker02 上执行 kubeadm join 命令:

kubeadm join 192.168.121.61:6443 --token jryd3e.qor9ctw2fnv6zzjo --discovery-token-ca-cert-hash sha256:06398adc59682b72540fcddd6cda78b53df8fc2b5e1c5d08daad69495f377300验证集群节点已加入,且 calico 相关的 Pod 已经运行在了各个节点:

[vagrant@master01 ~]$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

master01.example.com Ready control-plane 117m v1.29.1

worker01.example.com Ready <none> 37s v1.29.1

worker02.example.com Ready <none> 12s v1.29.1

[vagrant@master01 ~]$ kubectl get pod -n calico-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-7974f5dc8b-g8v69 1/1 Running 0 55m 10.85.0.5 master01.example.com <none> <none>

calico-node-fxl4f 1/1 Running 0 4m46s 192.168.121.87 worker01.example.com <none> <none>

calico-node-nvfpw 1/1 Running 0 4m21s 192.168.121.29 worker02.example.com <none> <none>

calico-node-x8xt9 1/1 Running 0 55m 192.168.121.61 master01.example.com <none> <none>

calico-typha-58bf4f4fc5-j9nxk 1/1 Running 0 4m16s 192.168.121.87 worker01.example.com <none> <none>

calico-typha-58bf4f4fc5-m7hk9 1/1 Running 0 55m 192.168.121.61 master01.example.com <none> <none>

csi-node-driver-94tzw 2/2 Running 0 4m21s 10.85.0.2 worker02.example.com <none> <none>

csi-node-driver-gb9tg 2/2 Running 0 4m46s 10.85.0.2 worker01.example.com <none> <none>

csi-node-driver-r4f7r 2/2 Running 0 55m 10.85.0.4 master01.example.com <none> <none>Kubernetes 常用组件部署

安装完集群后,在 Kubernetes 最常用的组件是 ingress-nginx 和 dashboard 。

使用 Helm 部署 ingress-nginx

为了便于将集群中的服务暴露到集群外部,需要使用 Ingress。

使用 Helm 将 ingress-nginx 部署到 Kubernetes 上。

Nginx Ingress Controller 将被部署到 Kubernetes 的边缘节点上。

将 worker01 设为边缘节点,打上标签:

kubectl label node worker01.example.com node-role.kubernetes.io/edge=下载 ingress-nginx 的 helm chart:

wget https://github.com/kubernetes/ingress-nginx/releases/download/helm-chart-4.9.0/ingress-nginx-4.9.0.tgz查看该 chart 可自定义的配置:

helm show values ingress-nginx-4.9.0.tgz对 ingress-nginx 进行配置的自定义:

controller:

ingressClassResource:

name: nginx

enabled: true

default: true

controllerValue: "k8s.io/ingress-nginx"

admissionWebhooks:

enabled: false

replicaCount: 1

image:

registry: docker.io

image: unreachableg/registry.k8s.io_ingress-nginx_controller

tag: "v1.9.5"

digest: sha256:bdc54c3e73dcec374857456559ae5757e8920174483882b9e8ff1a9052f96a35

hostNetwork: true

nodeSelector:

node-role.kubernetes.io/edge: ''

affinity:

podAntiAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchExpressions:

- key: app

operator: In

values:

- nginx-ingress

- key: component

operator: In

values:

- controller

topologyKey: kubernetes.io/hostname

tolerations:

- key: node-role.kubernetes.io/master

operator: Exists

effect: NoSchedule

- key: node-role.kubernetes.io/master

operator: Exists

effect: PreferNoSchedule将 image 地址指向 docker.io 因为默认的 registry.k8s.io 无法访问。

设置 nginx ingress controller 使用宿主机网络没有指定 externalIP。

检查 Pod 的运行状态然后访问 worker01 的 IP:

kubectl get pod -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-controller-6445445cb8-s4h84 1/1 Running 0 2m53s

curl http://192.168.121.87

<html>

<head><title>404 Not Found</title></head>

<body>

<center><h1>404 Not Found</h1></center>

<hr><center>nginx</center>

</body>

</html>返回 404 即部署完成。

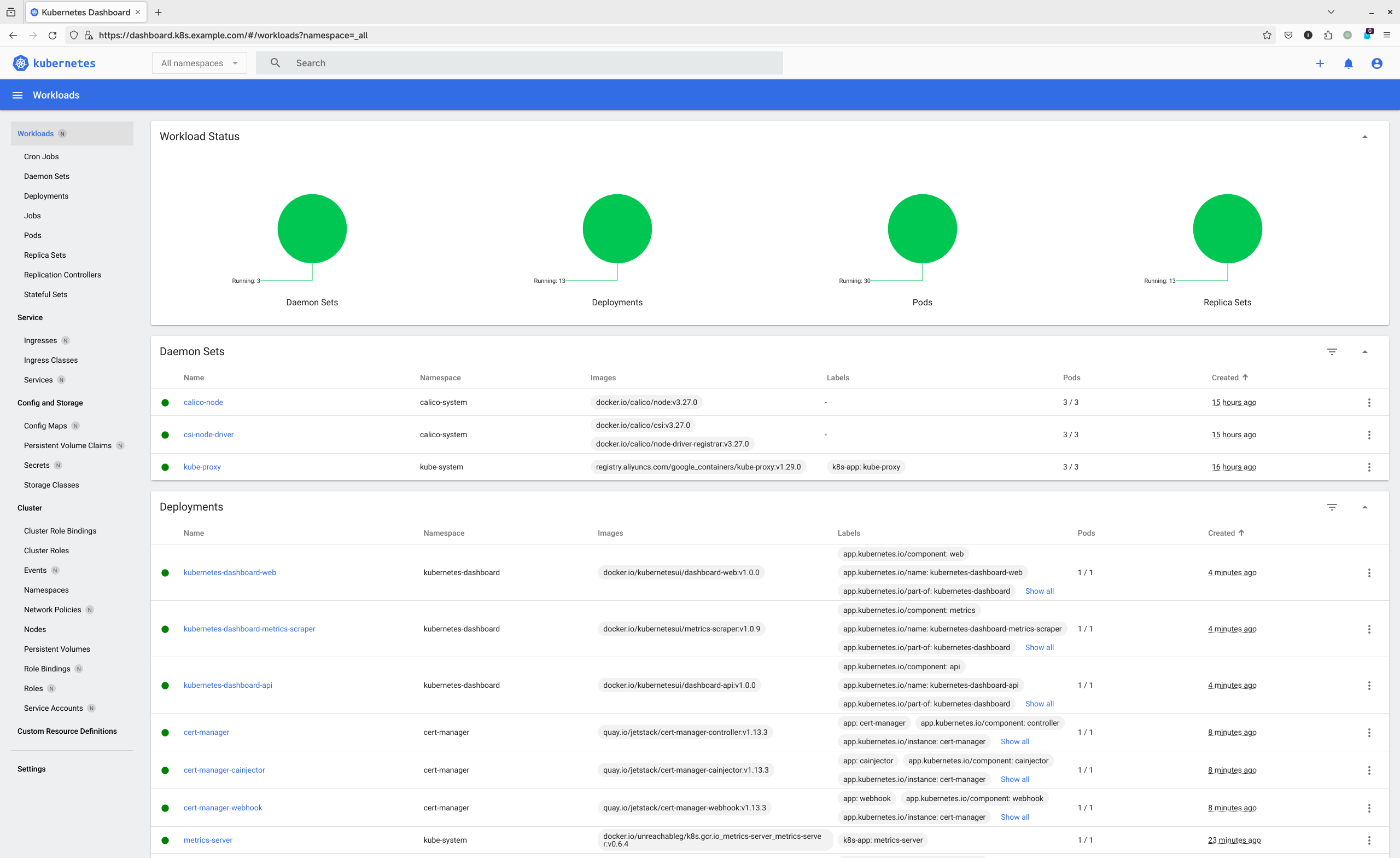

使用 Helm 部署 dashboard

首先部署 metrics-server :

wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.4/components.yaml将 components.yaml 中的 image 修改成 docker.io/unreachableg/k8s.gcr.io_metrics-server_metrics-server:v0.6.4。

在容器启动参数中添加 --kubelet-insecure-tls 。

通过 kubectl apply 创建资源:

kubectl apply -f components.yaml当 Pod 起来后就可以查看集群和 Pod 的 metrics 的信息:

[vagrant@master01 ~]$ kubectl get pod -n kube-system | grep metrics

metrics-server-7d686f4d9d-fvhrk 1/1 Running 0 81s

[vagrant@master01 ~]$ kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

master01.example.com 69m 1% 2347Mi 30%

worker01.example.com 12m 0% 1002Mi 13%

worker02.example.com 15m 0% 871Mi 11%

[vagrant@master01 ~]$ kubectl top pod -n kube-system

NAME CPU(cores) MEMORY(bytes)

coredns-857d9ff4c9-2sbc2 1m 22Mi

coredns-857d9ff4c9-88j99 1m 76Mi

etcd-master01.example.com 6m 122Mi

kube-apiserver-master01.example.com 21m 498Mi

kube-controller-manager-master01.example.com 4m 167Mi

kube-proxy-9p9v8 3m 80Mi

kube-proxy-bnw6v 4m 82Mi

kube-proxy-l2btg 3m 83Mi

kube-scheduler-master01.example.com 1m 75Mi

metrics-server-7d686f4d9d-fvhrk 1m 27Mi

tigera-operator-55585899bf-gknl8 1m 105Mik8s dashboard的v3版本现在默认使用cert-manager和nginx-ingress-controller。

安装 cert-manager :

wget https://github.com/cert-manager/cert-manager/releases/download/v1.13.3/cert-manager.yaml

kubectl apply -f cert-manager.yaml确保 cert-manager 的所有pod启动正常:

kubectl get pods -n cert-manager

NAME READY STATUS RESTARTS AGE

cert-manager-5c9d8879fd-rdk2v 1/1 Running 0 40s

cert-manager-cainjector-6cc9b5f678-ksd29 1/1 Running 0 40s

cert-manager-webhook-7bb7b75848-rfv4r 1/1 Running 0 40s获取 Dashboard 清单文件:

wget https://raw.githubusercontent.com/kubernetes/dashboard/v3.0.0-alpha0/charts/kubernetes-dashboard.yaml将 ingress 中的 host 替换想分配给 dashboard 的域名,随后应用清单文件:

kubectl apply -f kubernetes-dashboard.yaml等待 Dashboard 相关 Pod 正常启动:

kubectl get pod -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

kubernetes-dashboard-api-8586787f7-mkww2 1/1 Running 0 55s

kubernetes-dashboard-metrics-scraper-6959b784dc-cs5xh 1/1 Running 0 55s

kubernetes-dashboard-web-6b6d549b4-68v8n 1/1 Running 0 55s创建管理员 sa:

kubectl create serviceaccount kube-dashboard-admin-sa -n kube-system

kubectl create clusterrolebinding kube-dashboard-admin-sa \

--clusterrole=cluster-admin --serviceaccount=kube-system:kube-dashboard-admin-sa创建集群管理员登录 dashboard 所需的 token:

kubectl create token kube-dashboard-admin-sa -n kube-system --duration=87600h使用上面命令返回的 Token 来登录 dashboard: