
ODF (OpenShift Data Foundation) provides highly integrated storage and data services for OpenShift.

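ODF Overview

ODF uses storage classes to provide applications with several types of storage:

- Block storage

- Shared file system

- Multicloud object storage

ODF consists of the following components:

- Ceph, which provides block storage, a shared distributed file system, and object storage

- Ceph CSI, which manages the provisioning and lifecycle of PVs and PVCs

- NooBaa, which provides a multicloud object gateway

- The ODF, Rook-Ceph, and NooBaa Operators, which initialize and manage the ODF services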

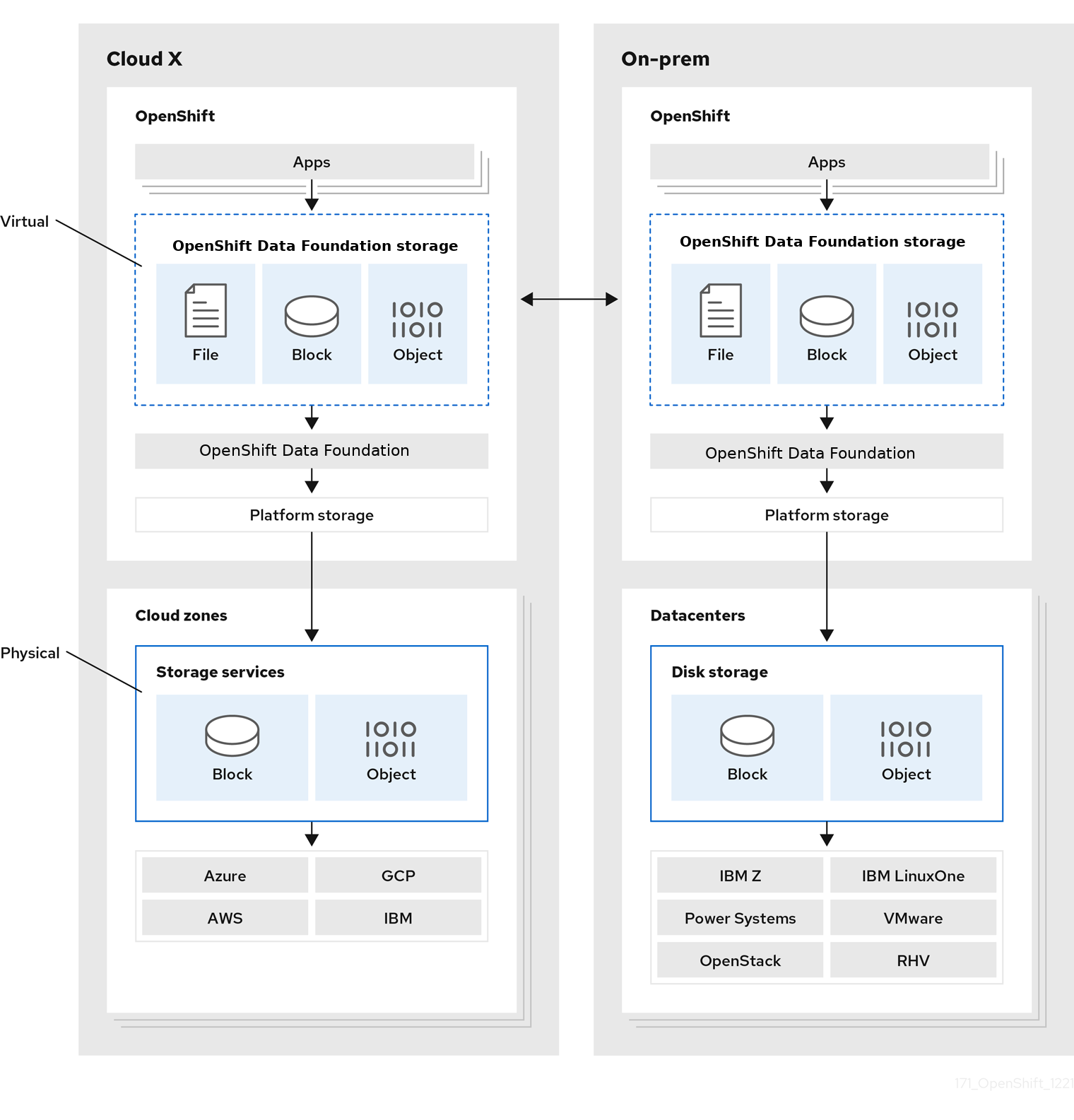

ODF 架构

ODF 架构示意图:

ODF 可以利用云供应商提供的存储设备和物理主机设备。

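ODF can be deployed in several ways:

- In external mode

- On the storage of various cloud platforms

- On OpenStack

- On bare metal

This walkthrough takes the bare-metal route and deploys in Internal - Attached Devices mode.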

Deploying ODF with local storage devices

Preparing the storage nodes

First, make sure the storage nodes have unused storage devices:

oc debug node/worker01

Starting pod/worker01-debug ...

To use host binaries, run `chroot /host`

Pod IP: 192.168.50.13

If you don't see a command prompt, try pressing enter.

sh-4.4# chroot /host

sh-4.4# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

vda 252:0 0 80G 0 disk

|-vda1 252:1 0 1M 0 part

|-vda2 252:2 0 127M 0 part

|-vda3 252:3 0 384M 0 part /boot

`-vda4 252:4 0 79.5G 0 part /sysroot

vdb 252:16 0 500G 0 disk

vdc 252:32 0 500G 0 disk
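Checking each node by eye gets tedious when there are several of them. As a rough sketch, the filter below reads a default lsblk listing and prints the whole disks that have no child rows and no mountpoint. The function name unused_disks is made up for this example, and the filter is only a first pass: it cannot tell whether a disk still carries an old filesystem or LVM signature.

```shell
# List whole disks that have no partitions and no mountpoint,
# reading the default `lsblk` listing from stdin.
# (unused_disks is a hypothetical helper, not part of any tool.)
unused_disks() {
  awk '
    # A new top-level disk: flush the previous candidate, if any.
    $6 == "disk" {
      if (cand != "") print cand
      cand = ($7 == "") ? $1 : ""
      next
    }
    # A child row (partition, LVM volume, ...) starts with a tree
    # character, so the disk above it is already in use.
    /^[^A-Za-z0-9]/ { cand = "" }
    END { if (cand != "") print cand }
  '
}

# Sample input copied from the worker01 listing above.
unused_disks <<'EOF'
NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT
vda 252:0 0 80G 0 disk
|-vda1 252:1 0 1M 0 part
|-vda2 252:2 0 127M 0 part
|-vda3 252:3 0 384M 0 part /boot
`-vda4 252:4 0 79.5G 0 part /sysroot
vdb 252:16 0 500G 0 disk
vdc 252:32 0 500G 0 disk
EOF
# prints:
# vdb
# vdc
```

On a node, the same function could be fed live data with lsblk | unused_disks from inside the chroot /host shell.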

Before deploying ODF, you also need to decide which nodes will act as storage nodes and apply the cluster.ocs.openshift.io/openshift-storage= label (with an empty value) to them:

oc label nodes <node-name> cluster.ocs.openshift.io/openshift-storage=

Set this label on all worker nodes:

oc label nodes -l node-role.kubernetes.io/worker= cluster.ocs.openshift.io/openshift-storage=

node/worker01 labeled

node/worker02 labeled

node/worker03 labeled

Providing node-local storage devices for ODF

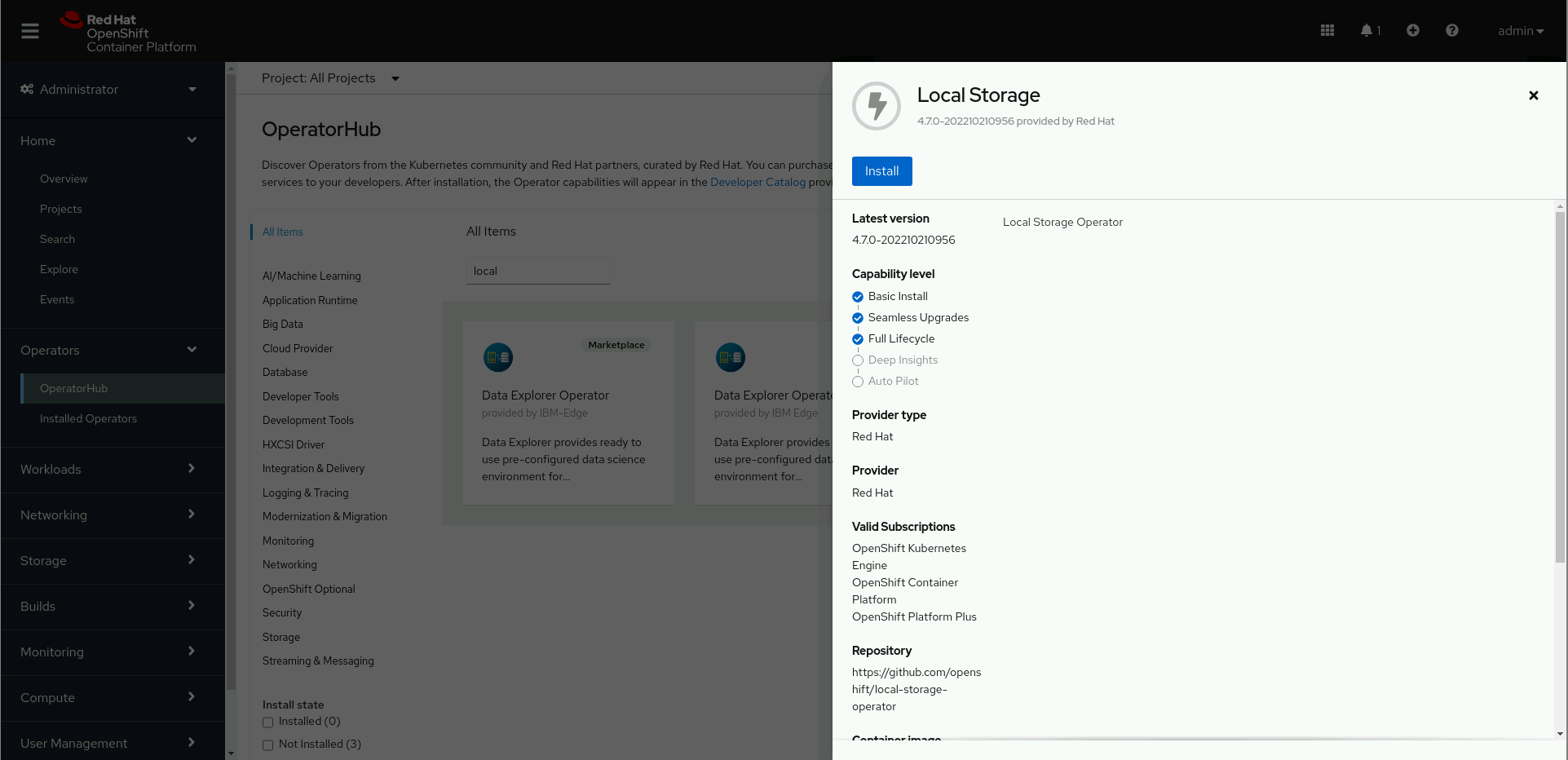

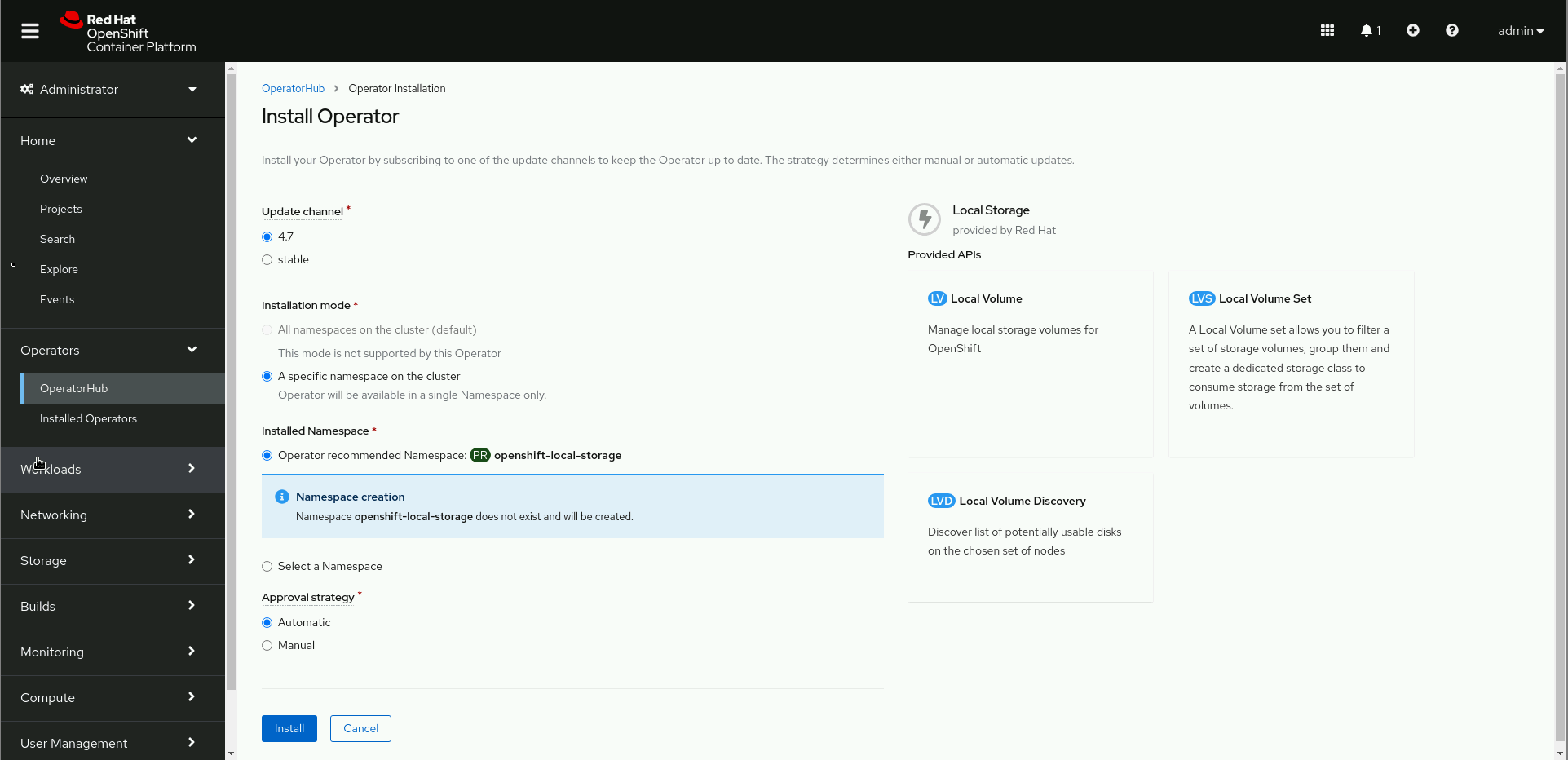

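Before creating an ODF cluster on local storage devices, install the Local Storage Operator, which discovers and provisions the local storage devices that ODF needs.

Keep the default options during installation: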

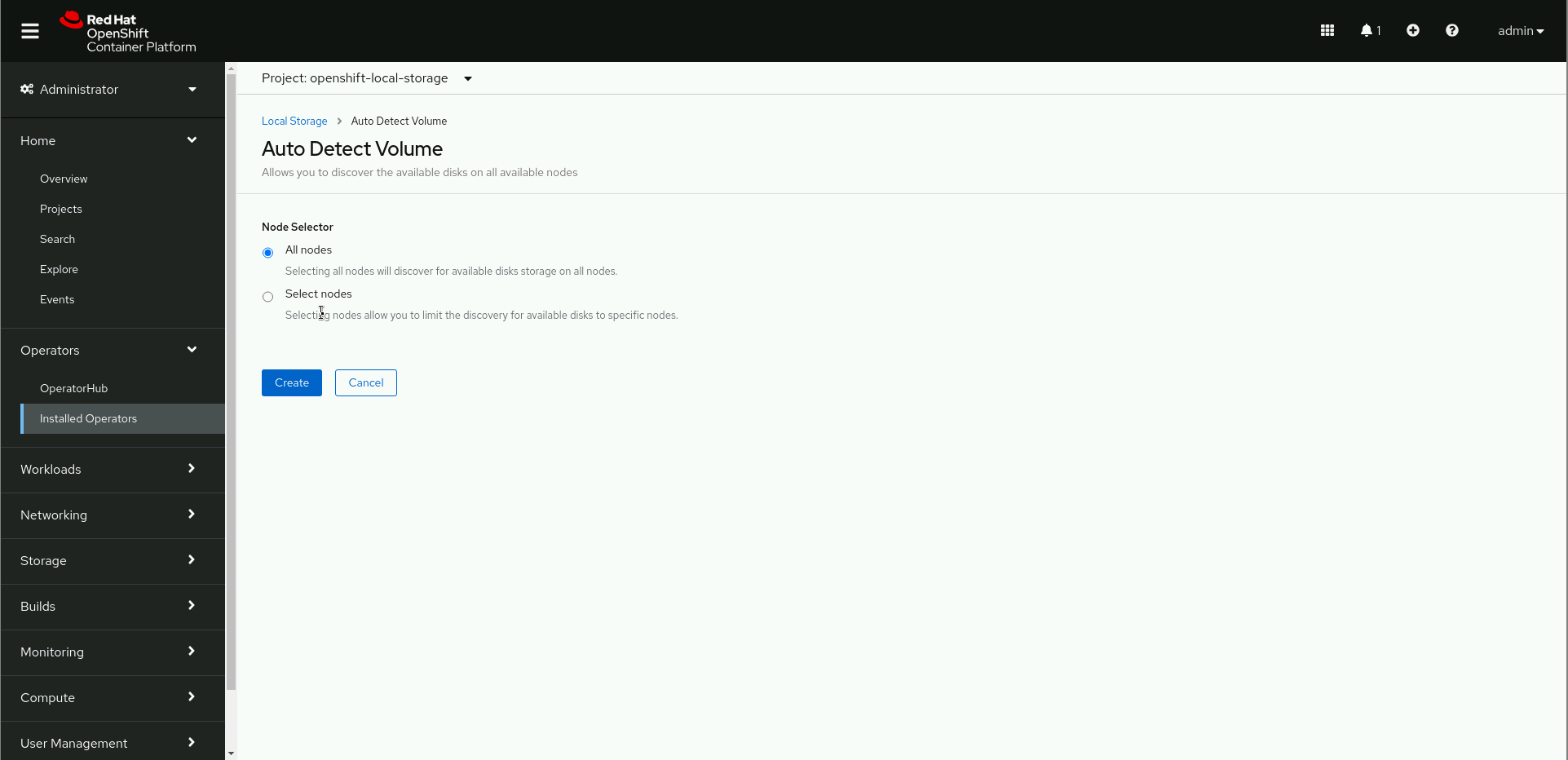

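Once the installation finishes, create a Local Volume Discovery and select all nodes in the Node Selector field. After it is created, it discovers every storage device on the selected nodes.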
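The same discovery can probably be requested from the command line instead of the web console. The sketch below prints a LocalVolumeDiscovery manifest for a list of node hostnames. The helper name local_volume_discovery is hypothetical. The v1alpha1 API version and the resource name auto-discover-devices follow the Local Storage Operator documentation as best recalled, so verify both against the installed operator version.

```shell
# Print a LocalVolumeDiscovery manifest that targets the hostnames
# given as arguments. (Hypothetical helper; the API version and the
# resource name are assumptions to verify against your operator.)
local_volume_discovery() {
  cat <<'EOF'
apiVersion: local.storage.openshift.io/v1alpha1
kind: LocalVolumeDiscovery
metadata:
  name: auto-discover-devices
  namespace: openshift-local-storage
spec:
  nodeSelector:
    nodeSelectorTerms:
      - matchExpressions:
          - key: kubernetes.io/hostname
            operator: In
            values:
EOF
  # One list entry per hostname, indented under "values:".
  for host in "$@"; do
    printf '              - %s\n' "$host"
  done
}

local_volume_discovery worker01 worker02 worker03
```

Piping the output to oc apply -f - would create the resource on the cluster.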

The diskmaker-discovery Pods are scheduled in the openshift-local-storage project:

oc get pod -n openshift-local-storage

NAME READY STATUS RESTARTS AGE

diskmaker-discovery-cxl48 1/1 Running 0 66s

diskmaker-discovery-dlsxq 1/1 Running 0 66s

diskmaker-discovery-sfq48 1/1 Running 0 66s

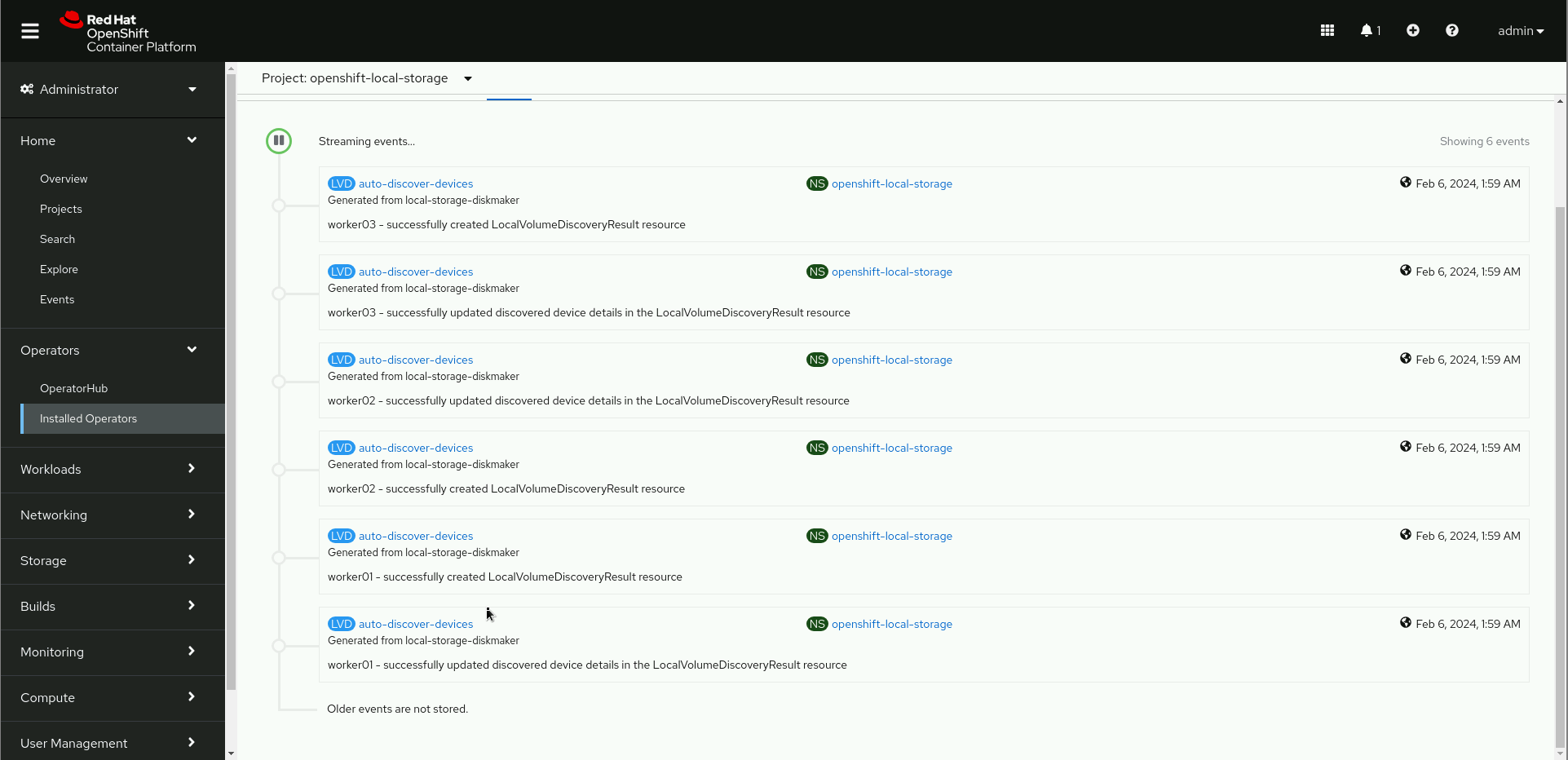

local-storage-operator-5d759c6bf4-jsw6m 1/1 Running 0 113s几分钟后可以看到磁盘设备发现已完成:

Inspect the LocalVolumeDiscoveryResult resources:

oc get LocalVolumeDiscoveryResult -n openshift-local-storage

NAME AGE

discovery-result-worker01 13m

discovery-result-worker02 13m

discovery-result-worker03 13m

View the details of one of these resources:

oc describe LocalVolumeDiscoveryResult discovery-result-worker01 -n openshift-local-storage | grep Path

Path: /dev/vda1

Path: /dev/vda2

Path: /dev/vda3

Path: /dev/vda4

Path: /dev/vdb

Path: /dev/vdc

Both /dev/vdb and /dev/vdc have been detected.

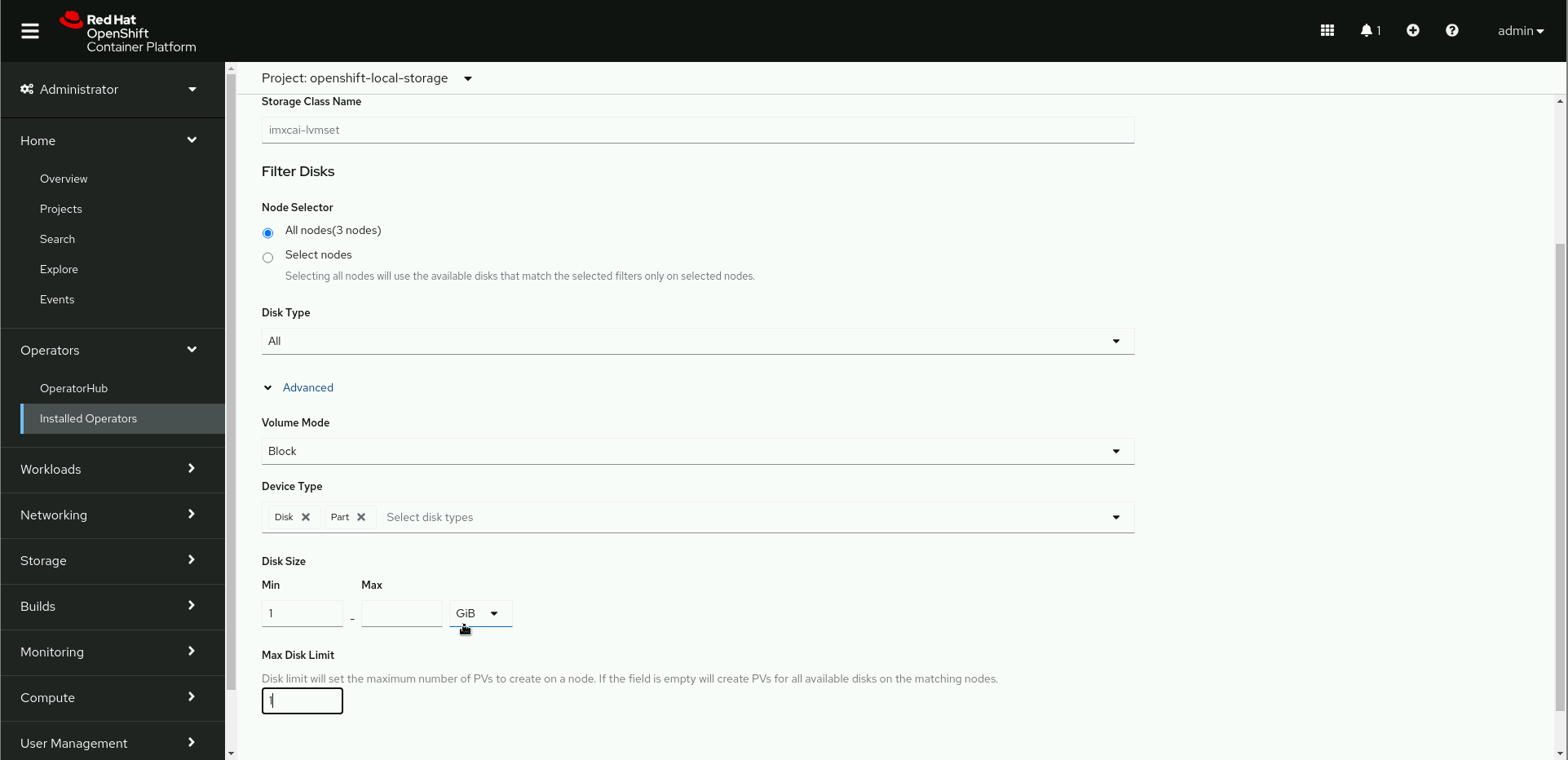

发现设备后,继续创建 Local Volume Set,用于创建对应的存储类,节点选择所有节点:

同时在 Max Disk Limit 出设置了 1 ,也就是一个节点只会提供一个 PV,前面有两块盘,当前只使用一块,后续一块用于扩展存储。
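For reference, a Local Volume Set with this limit might look roughly like the manifest printed by the sketch below. Several things here are assumptions rather than details from the console screenshots: that Max Disk Limit corresponds to spec.maxDeviceCount, that the volume mode is Block, and that nodes are selected by the cluster.ocs.openshift.io/openshift-storage label applied earlier instead of by picking every node. The helper name local_volume_set is hypothetical.

```shell
# Print a LocalVolumeSet manifest.
#   $1: name, reused as the storage class name
#   $2: maximum number of devices to claim per node
# (Hypothetical helper; field mapping is an assumption to verify.)
local_volume_set() {
  cat <<EOF
apiVersion: local.storage.openshift.io/v1alpha1
kind: LocalVolumeSet
metadata:
  name: $1
  namespace: openshift-local-storage
spec:
  storageClassName: $1
  volumeMode: Block
  maxDeviceCount: $2
  deviceInclusionSpec:
    deviceTypes:
      - disk
  nodeSelector:
    nodeSelectorTerms:
      - matchExpressions:
          - key: cluster.ocs.openshift.io/openshift-storage
            operator: Exists
EOF
}

local_volume_set imxcai-lvmset 1
```

Raising the second argument later is one conceivable way to bring the spare disk into play, though the expansion procedure itself is outside this sketch.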

The storage class and the PVs associated with the local devices then appear:

oc get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

imxcai-lvmset kubernetes.io/no-provisioner Delete WaitForFirstConsumer false 88s

oc get pv | grep imxcai-lvmset

local-pv-373f3bb3 500Gi RWO Delete Available imxcai-lvmset 31s

local-pv-77d7b4ac 500Gi RWO Delete Available imxcai-lvmset 32s

local-pv-8786c248 500Gi RWO Delete Available imxcai-lvmset 32s

Installing the OCS Operator

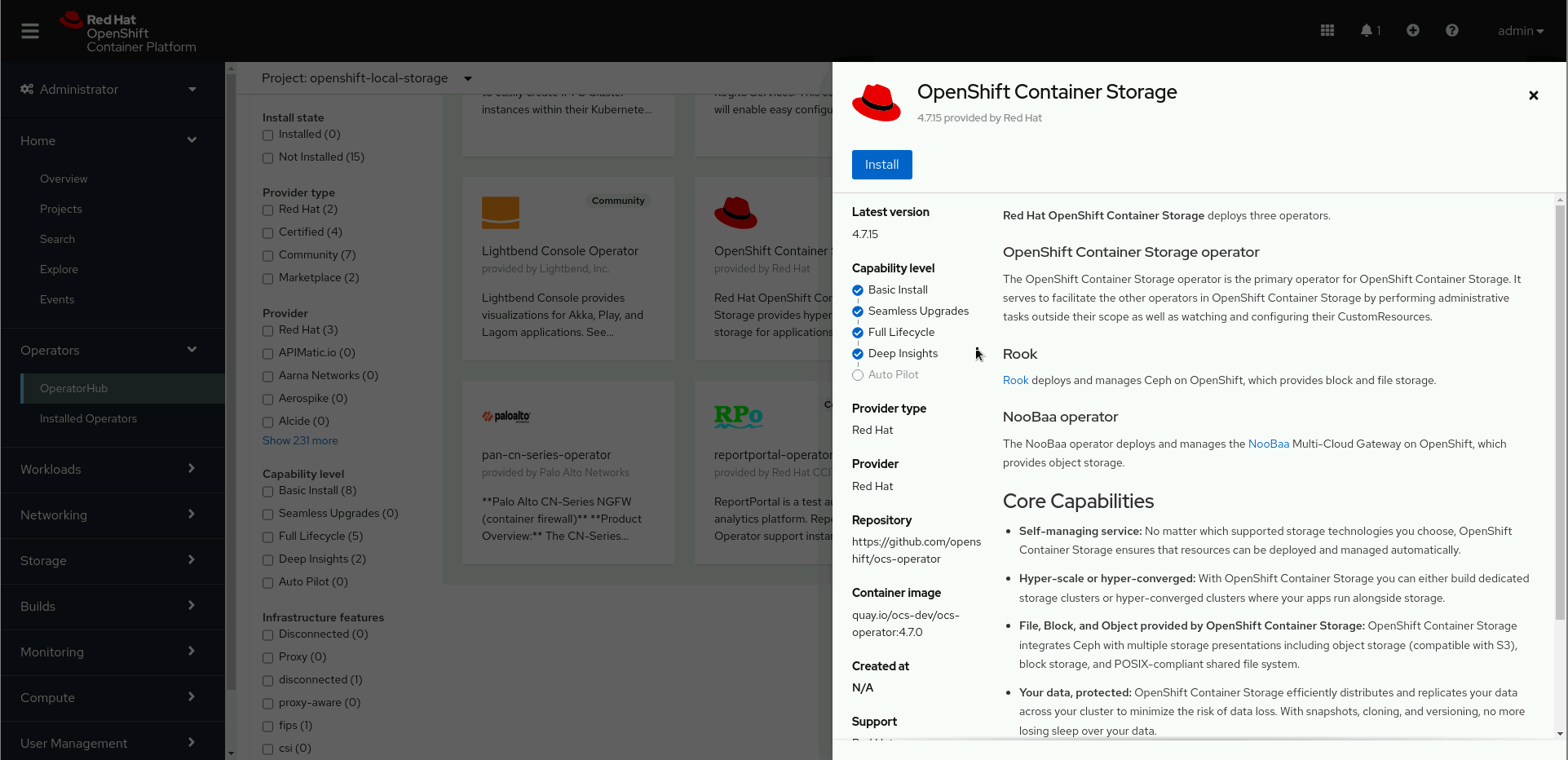

The OCS Operator installs the Rook-Ceph Operator and the NooBaa Operator as dependencies.

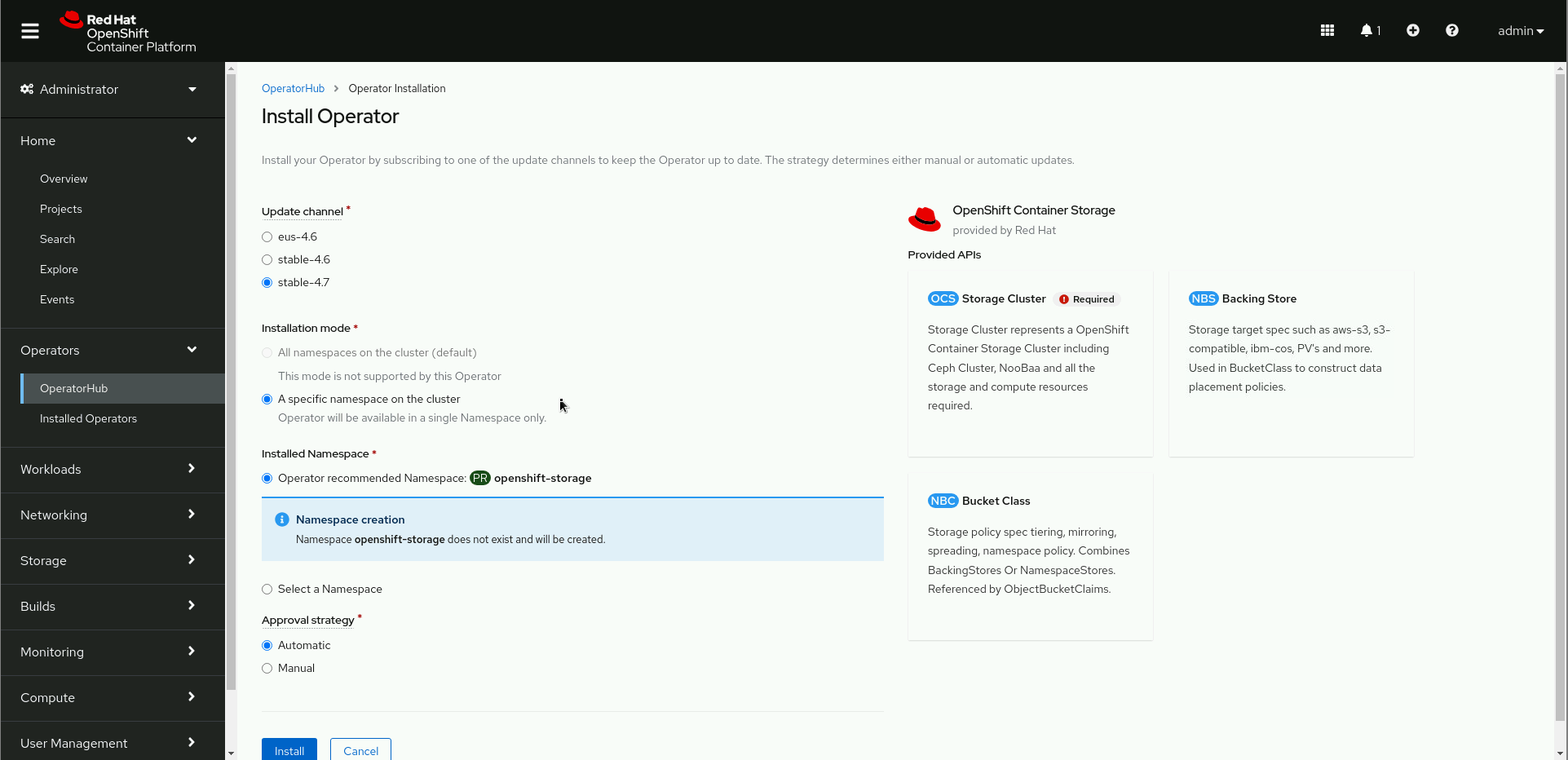

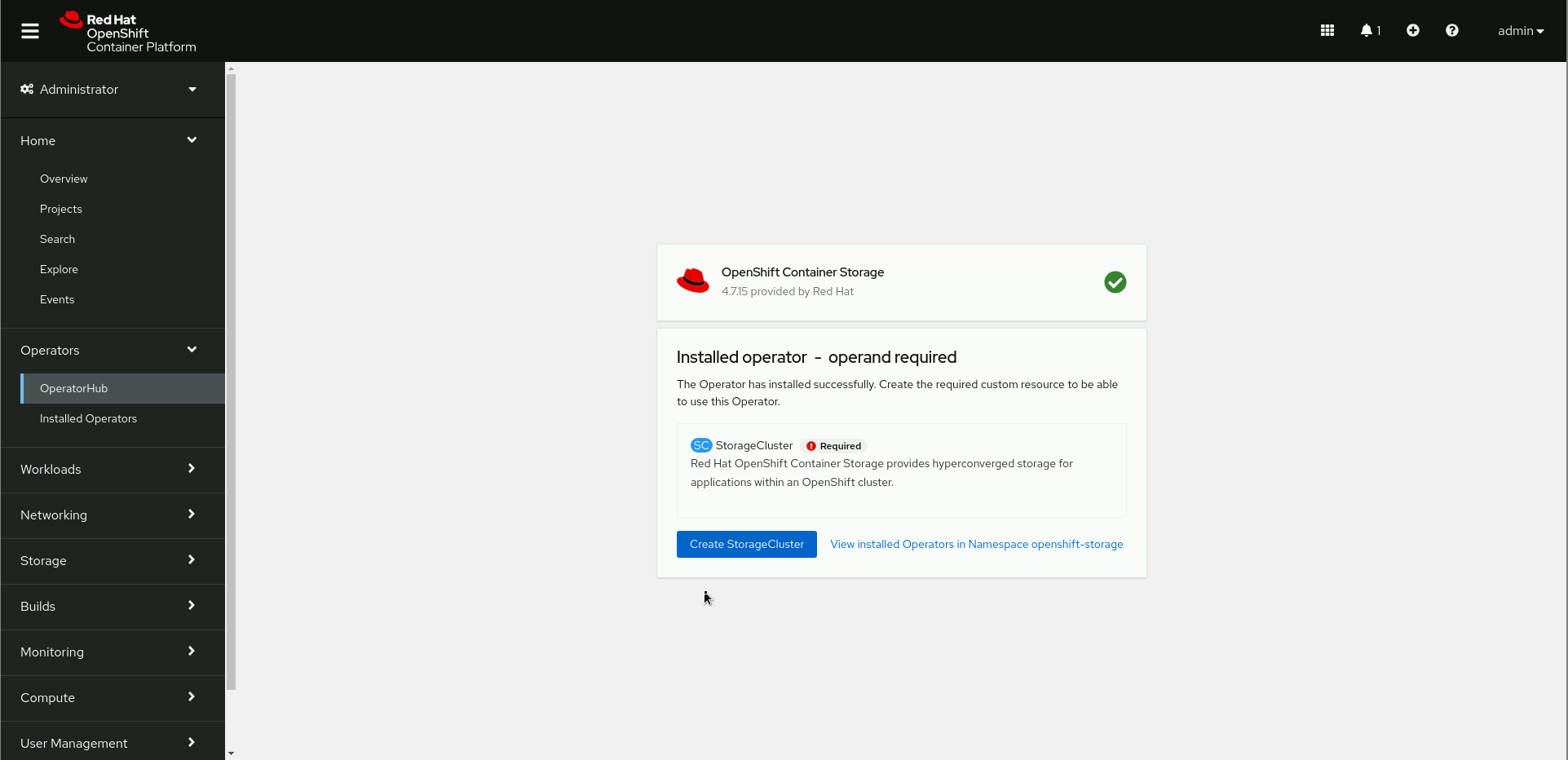

Find the OCS Operator in OperatorHub and install it, keeping the default configuration.

After the installation completes, check its status in the openshift-storage project:

oc get operator,pods -n openshift-storage

NAME AGE

operator.operators.coreos.com/local-storage-operator.openshift-local-storage 30m

operator.operators.coreos.com/ocs-operator.openshift-storage 3m41s

NAME READY STATUS RESTARTS AGE

pod/noobaa-operator-5b8d6d8849-fvp9v 1/1 Running 0 2m41s

pod/ocs-metrics-exporter-647dd97877-ggsfn 1/1 Running 0 2m41s

pod/ocs-operator-7cf74b897d-9tkfc 1/1 Running 0 2m41s

pod/rook-ceph-operator-7df548cc9-gb8tr 1/1 Running 0 2m41s

Creating the StorageCluster

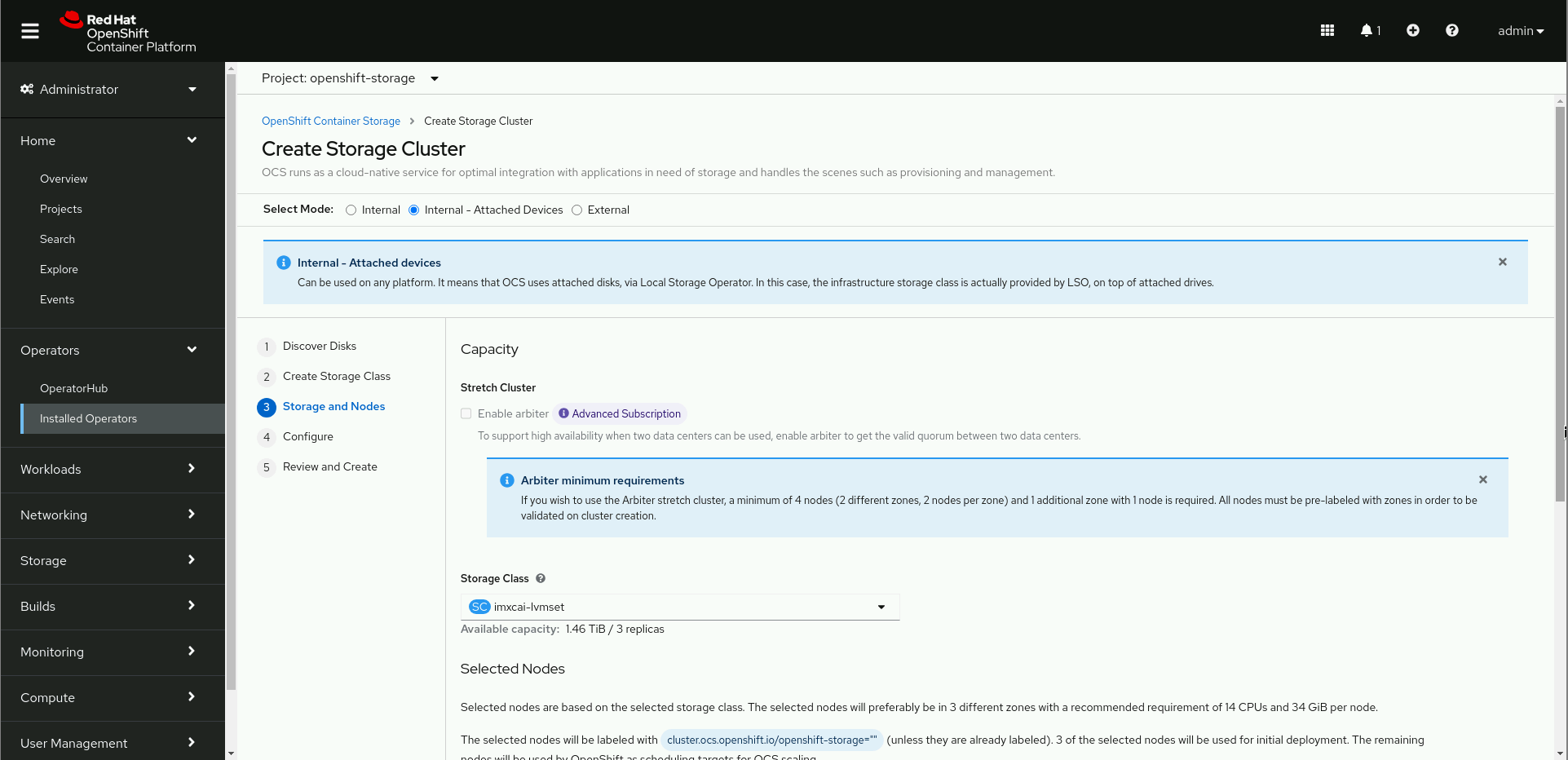

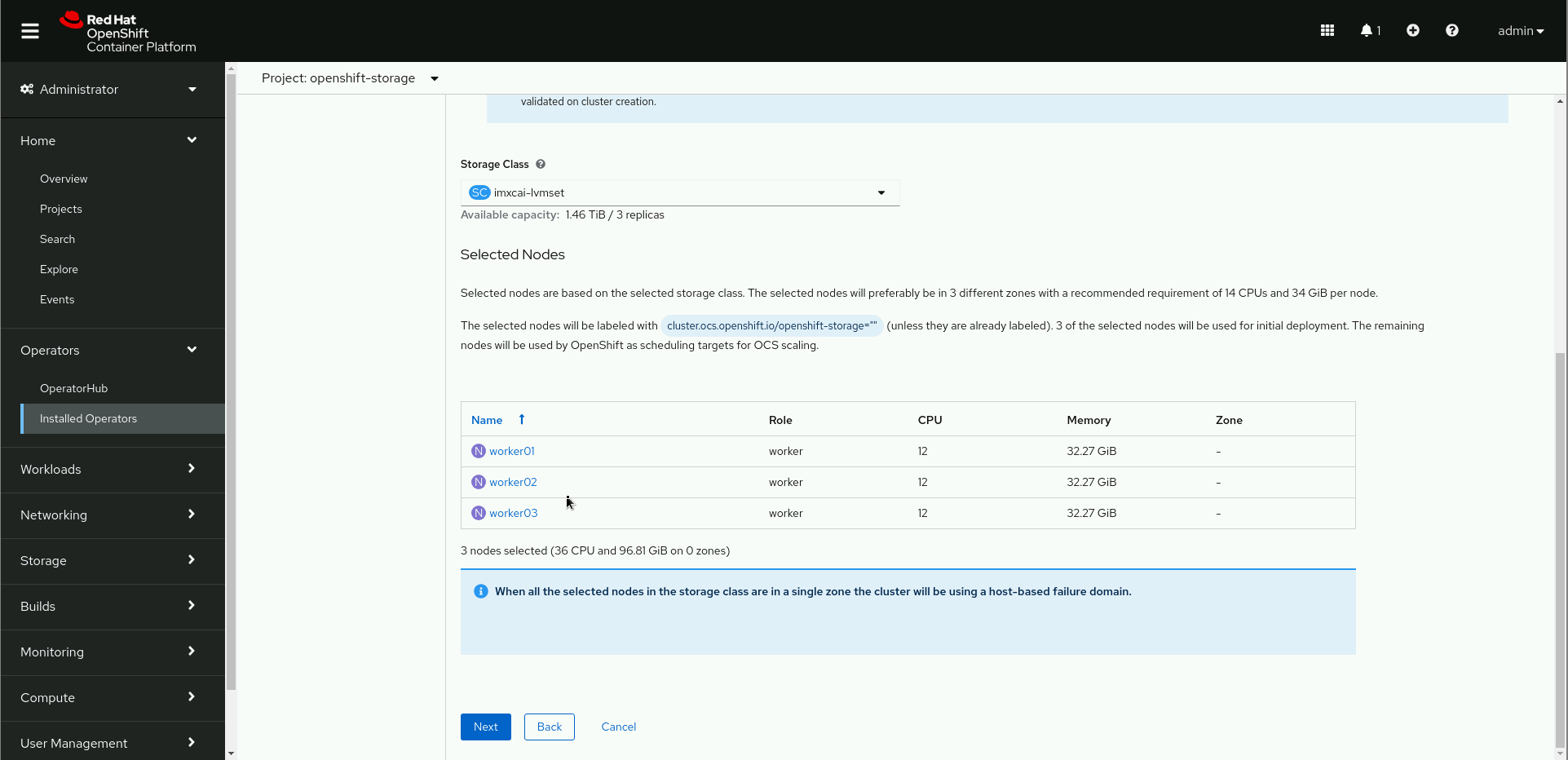

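Once the OCS Operator is installed, click Create Storage Cluster and select Internal - Attached Devices mode. Then choose the imxcai-lvmset storage class created earlier through the Local Storage Operator, wait until all worker nodes are listed, click Next, and finish the creation.

Verifying the ODF deployment

Check the status of the storagecluster and pod resources in the openshift-storage project: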

oc get storagecluster,pods -n openshift-storage

NAME AGE PHASE EXTERNAL CREATED AT VERSION

storagecluster.ocs.openshift.io/ocs-storagecluster 5m25s Ready 2024-02-06T07:29:28Z 4.7.0

NAME READY STATUS RESTARTS AGE

pod/csi-cephfsplugin-7d7dm 3/3 Running 0 5m18s

pod/csi-cephfsplugin-provisioner-7bf74694d-dxmvd 6/6 Running 0 5m17s

pod/csi-cephfsplugin-provisioner-7bf74694d-mdjqz 6/6 Running 0 5m17s

pod/csi-cephfsplugin-rmmjv 3/3 Running 0 5m18s

pod/csi-cephfsplugin-v5lhp 3/3 Running 0 5m18s

pod/csi-rbdplugin-4cl7d 3/3 Running 0 5m18s

pod/csi-rbdplugin-8mc4t 3/3 Running 0 5m18s

pod/csi-rbdplugin-provisioner-7d4d6d4945-fjgll 6/6 Running 1 5m18s

pod/csi-rbdplugin-provisioner-7d4d6d4945-sw8r9 6/6 Running 0 5m18s

pod/csi-rbdplugin-rlh5q 3/3 Running 0 5m18s

pod/noobaa-core-0 1/1 Running 0 3m10s

pod/noobaa-db-pg-0 1/1 Running 0 3m10s

pod/noobaa-endpoint-5955657996-8fbcw 1/1 Running 0 93s

pod/noobaa-operator-5b8d6d8849-fvp9v 1/1 Running 0 9m40s

pod/ocs-metrics-exporter-647dd97877-ggsfn 1/1 Running 0 9m40s

pod/ocs-operator-7cf74b897d-9tkfc 1/1 Running 0 9m40s

pod/rook-ceph-crashcollector-worker01-659bd69798-4sj6m 1/1 Running 0 4m2s

pod/rook-ceph-crashcollector-worker02-864c949c65-9cn8v 1/1 Running 0 3m40s

pod/rook-ceph-crashcollector-worker03-6d8d7d55fb-kpk6r 1/1 Running 0 3m53s

pod/rook-ceph-mds-ocs-storagecluster-cephfilesystem-a-5d6f66782pqmc 2/2 Running 0 2m50s

pod/rook-ceph-mds-ocs-storagecluster-cephfilesystem-b-6d879c66jpfg5 2/2 Running 0 2m49s

pod/rook-ceph-mgr-a-5868c56d55-5qgl5 2/2 Running 0 3m26s

pod/rook-ceph-mon-a-57fbf58f7-fcmq2 2/2 Running 0 4m12s

pod/rook-ceph-mon-b-7f966d6d84-bfn28 2/2 Running 0 3m53s

pod/rook-ceph-mon-c-778b5f5f8b-dt6gl 2/2 Running 0 3m40s

pod/rook-ceph-operator-7df548cc9-gb8tr 1/1 Running 0 9m40s

pod/rook-ceph-osd-0-cfb48979c-vcqc6 2/2 Running 0 3m14s

pod/rook-ceph-osd-1-54b679bb85-st2qw 2/2 Running 0 3m12s

pod/rook-ceph-osd-2-5569b7cc47-mqw46 2/2 Running 0 3m12s

pod/rook-ceph-osd-prepare-ocs-deviceset-imxcai-lvmset-0-data-0q8gj7 0/1 Completed 0 3m24s

pod/rook-ceph-osd-prepare-ocs-deviceset-imxcai-lvmset-0-data-1drqvg 0/1 Completed 0 3m24s

pod/rook-ceph-osd-prepare-ocs-deviceset-imxcai-lvmset-0-data-2f77k9 0/1 Completed 0 3m24s

pod/rook-ceph-rgw-ocs-storagecluster-cephobjectstore-a-59d8885brdqn 2/2 Running 0 2m16s

List the four storage classes that ODF creates by default:

oc get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

imxcai-lvmset kubernetes.io/no-provisioner Delete WaitForFirstConsumer false 18m

ocs-storagecluster-ceph-rbd openshift-storage.rbd.csi.ceph.com Delete Immediate true 5m45s

ocs-storagecluster-ceph-rgw openshift-storage.ceph.rook.io/bucket Delete Immediate false 5m45s

ocs-storagecluster-cephfs openshift-storage.cephfs.csi.ceph.com Delete Immediate true 5m45s

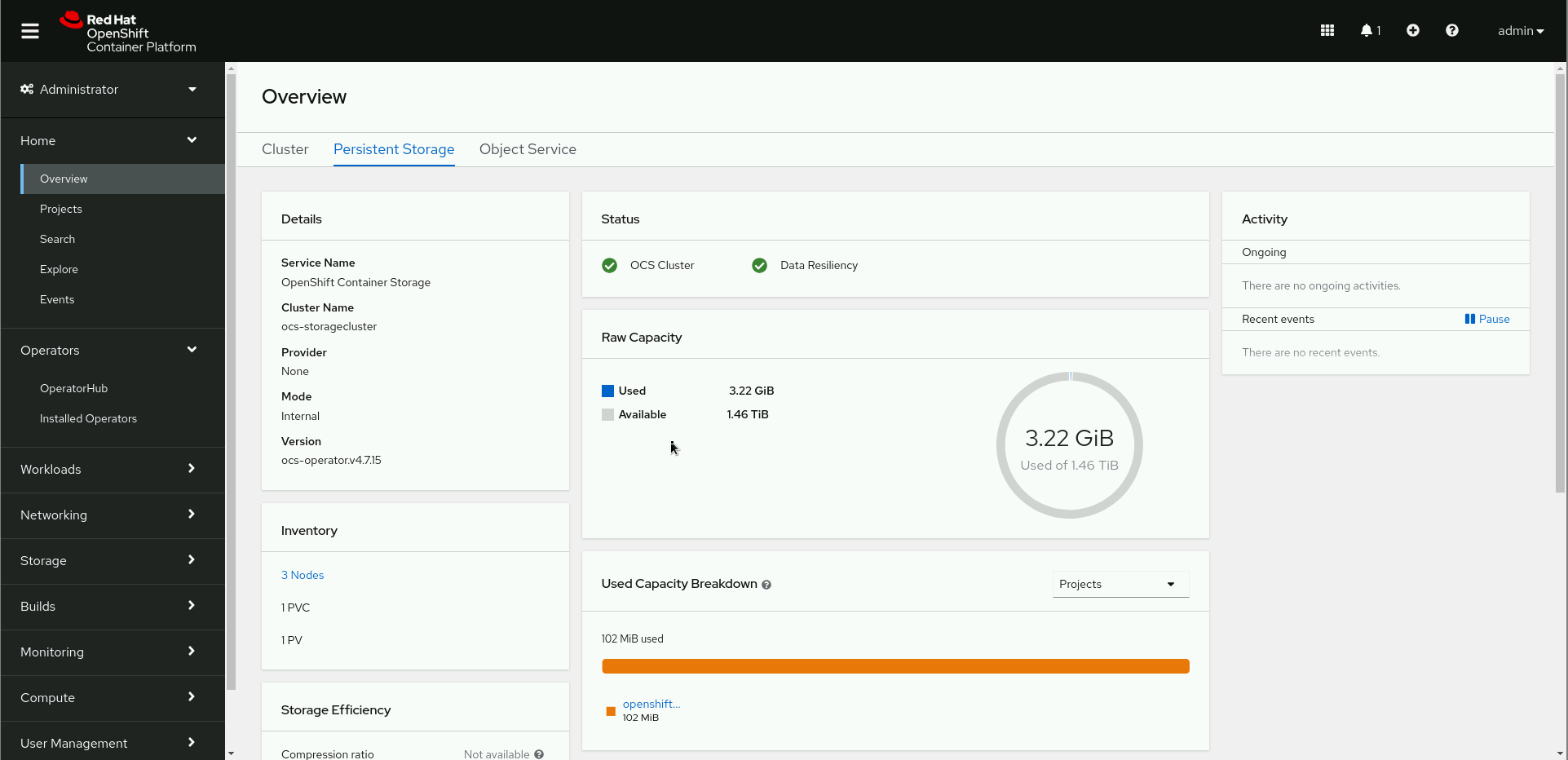

openshift-storage.noobaa.io openshift-storage.noobaa.io/obc Delete Immediate false 53s通过 Dashboard 查看 ODF 的集群状态:
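The storage class check can also be scripted. In the listing above the NooBaa class is several minutes younger than the other three, so it may be missing for a while after the cluster reports Ready. The sketch below reads the tabular output of oc get sc and prints whichever of the four ODF storage classes are not there yet. The function name missing_odf_storage_classes is made up for this example, and the class names are simply the ones from this deployment.

```shell
# Print the default ODF storage classes that are absent from the
# `oc get sc` table supplied on stdin (one name per line, sorted).
# (Hypothetical helper; names are taken from the listing above.)
missing_odf_storage_classes() {
  awk '
    BEGIN {
      want["ocs-storagecluster-ceph-rbd"] = 1
      want["ocs-storagecluster-ceph-rgw"] = 1
      want["ocs-storagecluster-cephfs"] = 1
      want["openshift-storage.noobaa.io"] = 1
    }
    # The first column is the storage class name.
    ($1 in want) { delete want[$1] }
    END { for (name in want) print name }
  ' | sort
}

# Sample: the NooBaa class has not been created yet.
missing_odf_storage_classes <<'EOF'
NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE
imxcai-lvmset kubernetes.io/no-provisioner Delete WaitForFirstConsumer false 18m
ocs-storagecluster-ceph-rbd openshift-storage.rbd.csi.ceph.com Delete Immediate true 5m45s
ocs-storagecluster-ceph-rgw openshift-storage.ceph.rook.io/bucket Delete Immediate false 5m45s
ocs-storagecluster-cephfs openshift-storage.cephfs.csi.ceph.com Delete Immediate true 5m45s
EOF
# prints: openshift-storage.noobaa.io
```

Against a live cluster it could be run as oc get sc | missing_odf_storage_classes; empty output means all four classes exist.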

Check the cluster status with the Ceph client:

oc exec -it -n openshift-storage rook-ceph-operator-7df548cc9-gb8tr -- /bin/bash

bash-4.4$ ceph -c /var/lib/rook/openshift-storage/openshift-storage.config -s

cluster:

id: 43d582a9-10cd-4830-8ad7-fbf45a04598c

health: HEALTH_OK

services:

mon: 3 daemons, quorum a,b,c (age 6m)

mgr: a(active, since 6m)

mds: ocs-storagecluster-cephfilesystem:1 {0=ocs-storagecluster-cephfilesystem-a=up:active} 1 up:standby-replay

osd: 3 osds: 3 up (since 5m), 3 in (since 5m)

rgw: 1 daemon active (ocs.storagecluster.cephobjectstore.a)

data:

pools: 10 pools, 176 pgs

objects: 328 objects, 127 MiB

usage: 3.2 GiB used, 1.5 TiB / 1.5 TiB avail

pgs: 176 active+clean

io:

client: 852 B/s rd, 3.7 KiB/s wr, 1 op/s rd, 0 op/s wr

bash-4.4$ ceph -c /var/lib/rook/openshift-storage/openshift-storage.config osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 1.46489 root default

-3 0.48830 host worker01

0 hdd 0.48830 osd.0 up 1.00000 1.00000

-7 0.48830 host worker02

2 hdd 0.48830 osd.2 up 1.00000 1.00000

-5 0.48830 host worker03

1 hdd 0.48830 osd.1 up 1.00000 1.00000
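The capacity figures in this output follow from the disk layout: three nodes contribute one 500G disk each, which is roughly 0.49 TiB per OSD and about 1.46 TiB in total (reported as 1.5 TiB). The short sketch below reproduces that arithmetic. The usable figure assumes the pools keep three replicas of every object, which is an assumption about the default pool settings rather than something shown in the output, and it ignores Ceph's own overhead. Both function names are hypothetical.

```shell
# Raw capacity in TiB for N disks of SIZE GiB each.
#   $1: number of disks   $2: size of each disk in GiB
raw_tib() {
  awk -v n="$1" -v gib="$2" 'BEGIN { printf "%.2f\n", n * gib / 1024 }'
}

# Usable capacity in TiB when every object is stored R times.
#   $1: number of disks   $2: disk size in GiB   $3: replica count
# (The replica count is an assumption; check the pool settings.)
usable_tib() {
  awk -v n="$1" -v gib="$2" -v r="$3" \
    'BEGIN { printf "%.2f\n", n * gib / 1024 / r }'
}

raw_tib 3 500       # prints 1.46
usable_tib 3 500 3  # prints 0.49
```

Under that replication assumption, adding the second 500G disk on each node would double both numbers.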